I wanted to write some posts about refactoring ARTv2 as I go through it. Personally, I’ve learned a lot developing these tools over the last few years. When I started writing these tools, I had a very different outlook on writing code. This had a lot to do with the incredibly fast-paced production environment I was in. I definitely looked at code as a means to an end, and if it “worked”, it was done.

Depending on the tool or its scope, this might be fine. But when I think about our industry, where most of us are working on games that are considered services, a successful game (League of Legends, Fortnite, World of Warcraft, etc.) could span 10+ years. When you think about the tools and pipeline you are using now, and being stuck with them 10+ years from now because your project is still successful, you’ll probably wish you had put more effort and thought into your code.

The neat thing about where ARTv2 is now is that it is much easier to look at the big picture and see where things can be fixed and cleaned up. When I first started writing it, I didn’t really have a big picture in mind. I’d develop a feature, then think of the next feature, and develop it. This led to lots of giant files with lots of duplication. So, now I’ll talk about what refactoring is, for anyone who doesn’t know, and why it’s important.

Code refactoring is the process of restructuring existing computer code—changing the factoring—without changing its external behavior.

When you tell your producers or lead that, it can be hard to sell them on the idea that this is a valuable endeavor. So, I actually made a slide deck going over the benefits of refactoring and giving some examples. I’ll start with a completely true example that came from ARTv2.

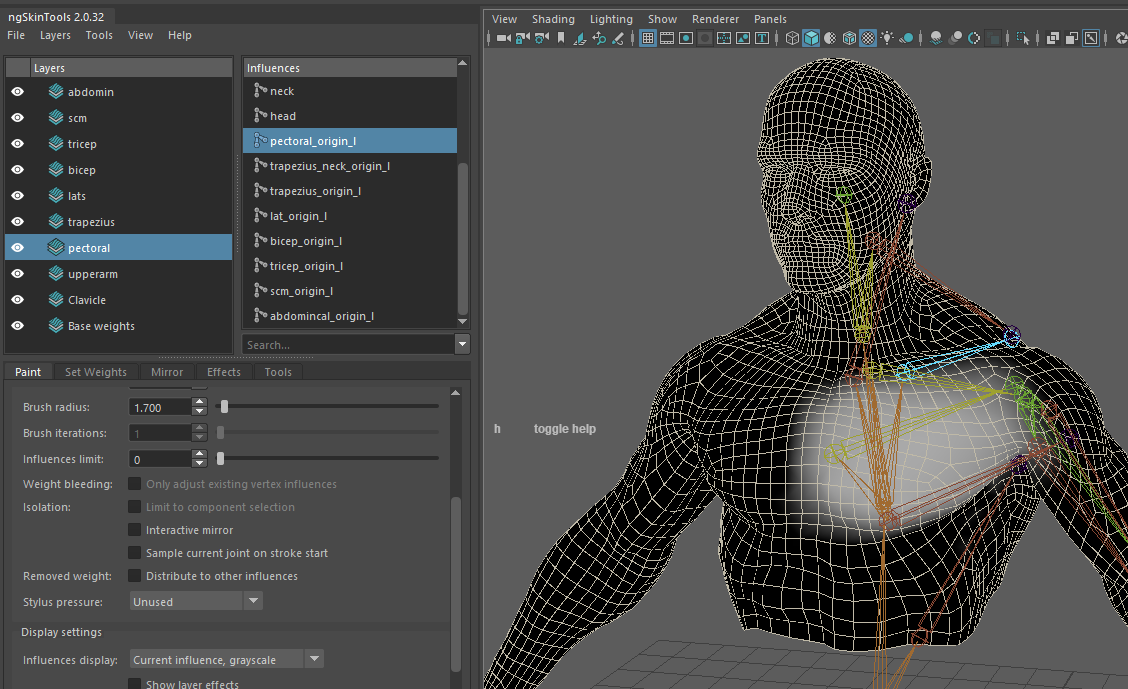

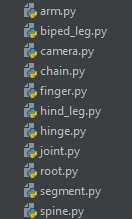

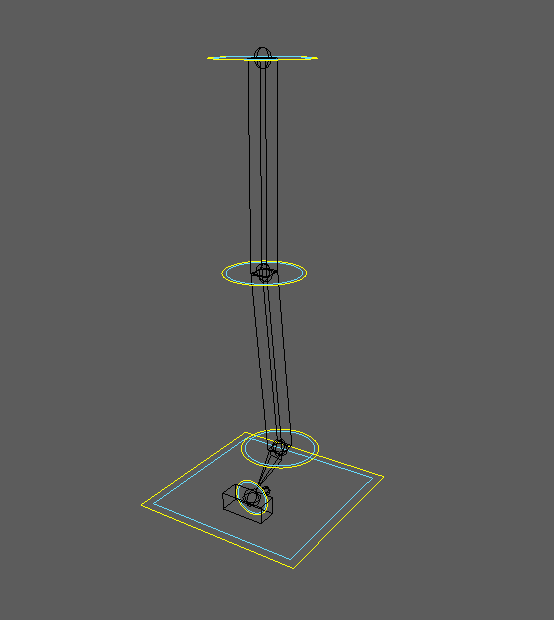

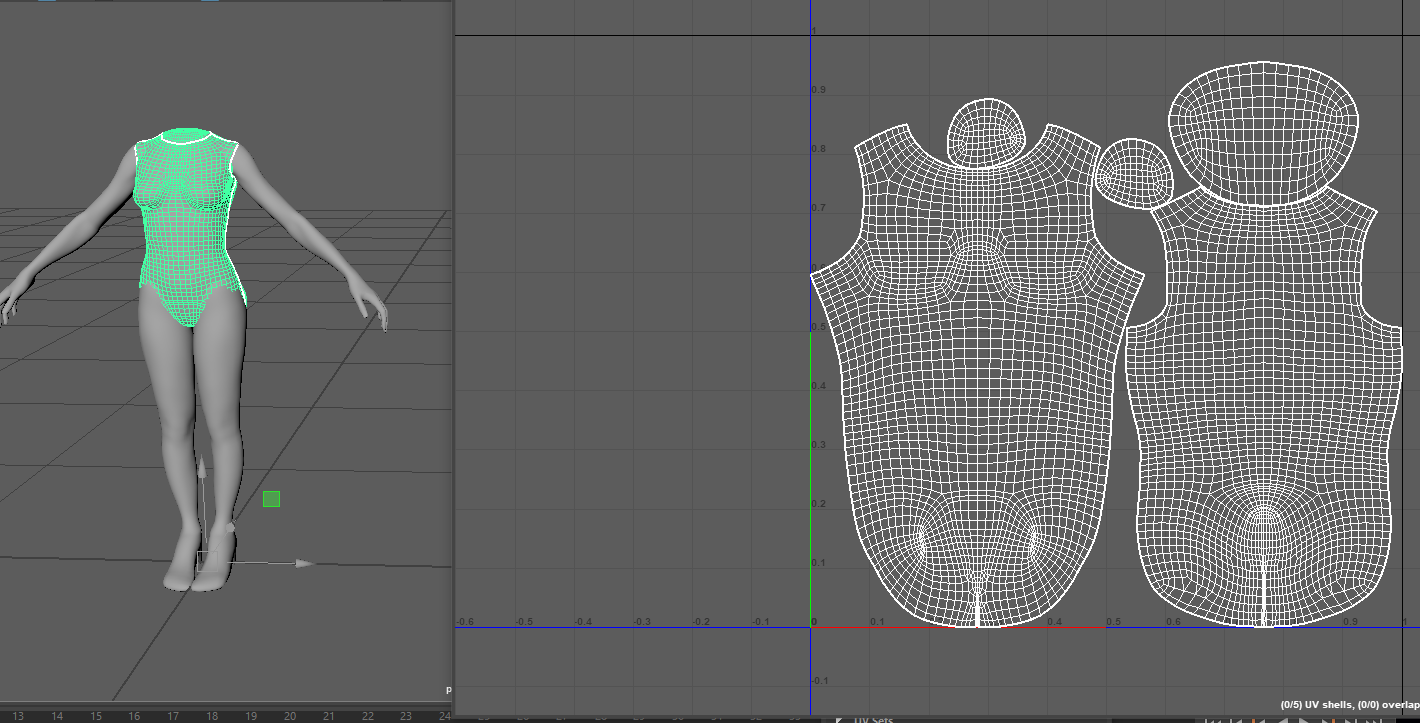

I was working on a character when a bug presented itself: joint and chain modules weren’t being parented correctly. I tracked it down and implemented a fix. A couple of days later, I changed the parent on one of those modules to a different joint, and the bug popped up again. I tracked it down and found that I had duplicated that parenting code into the change parent method. So I fixed it again. Some time later, I went to create a mirror of a module, and sure enough, the bug popped up again. It also popped up when loading a template. There were four separate places where the parenting code was implemented, and this came from the way I used to think about code.

Because I implemented things feature by feature, each feature was built as a complete tool. Each feature had code duplicated throughout, with little regard for reuse or sharing common functions. Did the code work? Sure. But as the above example points out, it makes tracking down and fixing bugs a massive pain (and it’s just sloppy). When I ran into that same bug over and over, I realized that maybe I should do a pass and clean things up.
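To make the duplication problem concrete, here is a minimal sketch of what the cleanup could look like. This is not the actual ARTv2 code: modules and the scene are modeled as plain dictionaries, and every name (`parent_module`, `create_module`, `change_parent`, `mirror_module`, `load_template`) is hypothetical. The only point is that all four features route through one shared function, so a parenting bug gets fixed once.

```python
def parent_module(module, parent_joint, scene):
    """Single shared implementation of the parenting logic (hypothetical)."""
    if parent_joint not in scene["joints"]:
        raise ValueError("Unknown parent joint: {}".format(parent_joint))
    module["parent"] = parent_joint
    return module


def create_module(name, parent_joint, scene):
    """Create a module, then parent it through the shared helper."""
    module = {"name": name, "parent": None}
    scene["modules"][name] = module
    return parent_module(module, parent_joint, scene)


def change_parent(name, new_parent, scene):
    """Re-parent an existing module through the same helper."""
    return parent_module(scene["modules"][name], new_parent, scene)


def mirror_module(name, mirrored_name, mirrored_parent, scene):
    """Build a mirrored copy; it goes through the same creation path."""
    if name not in scene["modules"]:
        raise KeyError("No module named {}".format(name))
    return create_module(mirrored_name, mirrored_parent, scene)


def load_template(template, scene):
    """Rebuild modules from a list of (module_name, parent_joint) pairs."""
    return [create_module(name, parent, scene) for name, parent in template]
```

With this shape, creating, re-parenting, mirroring, and template loading cannot drift apart, because none of them contains its own copy of the parenting logic.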

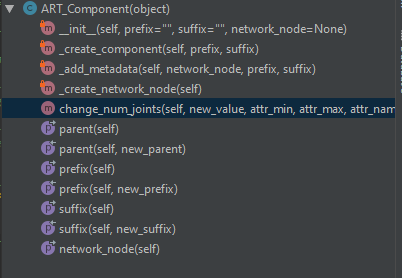

However, as I looked into it more, I realized I should take this opportunity to really think things through and to write unit tests as I went. If you don’t know what a unit test is, it’s basically code you write that tests code you’ve written :) A quick example: say you had a function that took in an integer and added two to it. Your test would call that function with different inputs, and maybe different types of inputs, and assert that your assumptions about the output are correct.
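Here is roughly what that quick example could look like using Python’s built-in `unittest` module. The function name `add_two` is made up for illustration, and rejecting non-integer input with a `TypeError` is an assumption of this sketch; your own function might reasonably behave differently.

```python
import unittest


def add_two(value):
    """Return the given integer plus two."""
    # Assumption for this sketch: anything that is not a plain int is rejected.
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        raise TypeError("add_two expects an integer")
    return value + 2


class TestAddTwo(unittest.TestCase):
    def test_adds_two(self):
        # Different inputs: positive, zero, and negative.
        self.assertEqual(add_two(1), 3)
        self.assertEqual(add_two(0), 2)
        self.assertEqual(add_two(-5), -3)

    def test_rejects_non_integers(self):
        # Different types of inputs: each should raise TypeError.
        for bad_input in ("3", 2.5, None):
            with self.assertRaises(TypeError):
                add_two(bad_input)
```

If this lived in a file such as `test_add_two.py` (a hypothetical name), running `python -m unittest test_add_two` would discover and run both tests.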